I defended my PhD thesis, Manifest Contracts on November 7th, 2013, with the final document submitted on December 6th. Since the doctoral degree shows up on my Penn transcript, I feel comfortable telling the world: I got a PhD! My thesis committee, comprising Stephanie Weirich (the chair), Rajeev Alur, Greg Morrisett, and Steve Zdancewic. Here’s the abstract:

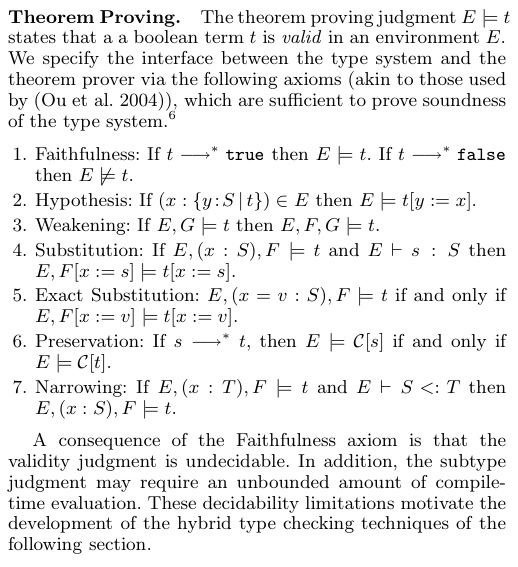

Eiffel popularized design by contract, a software design philosophy where programmers specify the requirements and guarantees of functions via executable pre- and post-conditions written in code. Findler and Felleisen brought contracts to higher-order programming, inspiring the PLT Racket implementation of contracts. Existing approaches for runtime checking lack reasoning principles and stop short of their full potential—most Racket contracts check only simple types. Moreover, the standard algorithm for higher-order contract checking can lead to unbounded space consumption and can destroy tail recursion. In this dissertation, I develop so-called manifest contract systems which integrate more coherently in the type system, and relate them to Findler-and-Felleisen-style latent contracts. I extend a manifest system with type abstraction and relational parametricity, and also show how to integrate dynamic types and contracts in a space efficient way, i.e., in a way that doesn’t destroy tail recursion. I put manifest contracts on a firm type-theoretic footing, showing that they support extensions necessary for real programming. Developing these principles is the first step in designing and implementing higher-order languages with contracts and refinement types.

I’ll be starting as a post-doc with Dave Walker on Monday.