If you read a description of a static analysis in a paper, what might you find? There’ll be some cute model of a language. Maybe some inference rules describing the analysis itself, but those rules probably rely on a variety of helper functions. These days, the analysis likely involves some logical reasoning: about the terms in the language, the branches conditionals might take, and so on.

What makes a language good for implementing such an analysis? You’d want a variety of features:

- Algebraic data types to model the language AST.

- Logic programming for cleanly specifying inference rules.

- Pure functional code for writing the helper functions.

- An SMT solver for answering logical queries.

Aaron Bembenek, Steve Chong, and I have developed a design that hits the sweet spot of those four points: given Datalog as a core, you add constructors, pure ML, and a type-safe interface to SMT. If you set things up just right, the system is a powerful and ergonomic way to write static analyses.

Formulog is our prototype implementation of our design; our paper on Formulog and its design was just conditionally accepted to OOPSLA 2020. To give a sense of why I’m excited, let me excerpt from our simple liquid type checker. Weighing in under 400 very short lines, it’s a nice showcase of how expressive Formulog is. (Our paper discusses substantially more complex examples.)

```
type base =
  | base_bool

type typ =
  | typ_tvar(tvar)
  | typ_fun(var, typ, typ)
  | typ_forall(tvar, typ)
  | typ_ref(var, base, exp)

and exp =
  | exp_var(var)
  | exp_bool(bool)
  | exp_op(op)
  | exp_lam(var, typ, exp)
  | exp_tlam(tvar, exp)
  | exp_app(exp, exp)
  | exp_tapp(exp, typ)
```

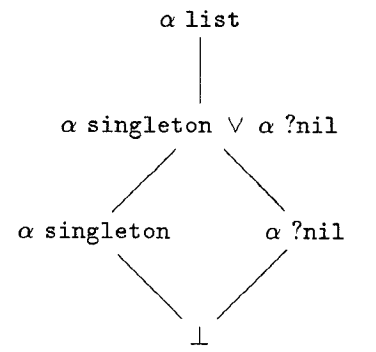

ADTs let you define your AST in a straightforward way. Here, bool is our only base type, but we could add more. Let’s look at some of the inference rules:

```
(* subtyping *)
output sub(ctx, typ, typ)

(* bidirectional typing rules *)
output synth(ctx, exp, typ)
output check(ctx, exp, typ)

(* subtyping between refinement types is implication *)
sub(G, typ_ref(X, B, E1), typ_ref(Y, B, E2)) :-
  wf_ctx(G),
  exp_subst(Y, exp_var(X), E2) = E2prime,
  encode_ctx(G, PhiG),
  encode_exp(E1, Phi1),
  encode_exp(E2prime, Phi2),
  is_valid(`PhiG /\ Phi1 ==> Phi2`).

(* lambda and application synth rules *)
synth(G, exp_lam(X, T1, E), T) :-
  wf_typ(G, T1),
  synth(ctx_var(G, X, T1), E, T2),
  typ_fun(X, T1, T2) = T.

synth(G, exp_app(E1, E2), T) :-
  synth(G, E1, typ_fun(X, T1, T2)),
  check(G, E2, T1),
  typ_subst(X, E2, T2) = T.

(* the only checking rule *)
check(G, E, T) :-
  synth(G, E, Tprime),
  sub(G, Tprime, T).
```

First, we declare our relations, that is, the (typed) inference rules we’ll be using. We show the most interesting case of subtyping: refinement implication. Several helper relations (wf_ctx, encode_*) and helper functions (exp_subst) patch things together. The typing rules that come after the subtyping rule follow a similar pattern, mixing the synth and check bidirectional typing relations with calls to helper functions like typ_subst.
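To make "subtyping between refinement types is implication" concrete, here is a minimal Python sketch, not Formulog code. It assumes refinements are plain Boolean formulas and replaces the SMT solver with a brute-force truth-table check. The names `is_valid`, `implies`, `phi_g`, `phi_1`, and `phi_2` are made up for illustration and only mirror the shape of the query `PhiG /\ Phi1 ==> Phi2`.

```python
from itertools import product


def is_valid(formula, variables):
    """Return True if `formula` holds under every Boolean assignment."""
    return all(
        formula(dict(zip(variables, values)))
        for values in product([False, True], repeat=len(variables))
    )


def implies(p, q):
    """Material implication on Python booleans."""
    return (not p) or q


# Hypothetical example: the context says b is true, and we ask whether
# {v : bool | v = b} is a subtype of {v : bool | v}.
phi_g = lambda env: env["b"]               # stand-in for the encoded context
phi_1 = lambda env: env["v"] == env["b"]   # refinement of the subtype
phi_2 = lambda env: env["v"]               # refinement of the supertype

subtype_ok = is_valid(
    lambda env: implies(phi_g(env) and phi_1(env), phi_2(env)),
    ["b", "v"],
)

# The reverse direction, without the context assumption, is not valid:
# v = True, b = False is a counterexample.
reverse_ok = is_valid(
    lambda env: implies(phi_2(env), phi_1(env)),
    ["b", "v"],
)
```

Enumerating assignments only works for a handful of Boolean variables; a real checker hands the formula to an SMT solver, as the `is_valid` premise in the rule does.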

```
fun exp_subst(X: var, E : exp, Etgt : exp) : exp =
  match Etgt with
  | exp_var(Y) => if X = Y then E else Etgt
  | exp_bool(_) => Etgt
  | exp_op(_) => Etgt
  | exp_lam(Y, Tlam, Elam) =>
    let Yfresh =
      fresh_for(Y, X::append(typ_freevars(Tlam), exp_freevars(Elam)))
    in
    let Elamfresh =
      if Y = Yfresh
      then Elam
      else exp_subst(Y, exp_var(Yfresh), Elam)
    in
    (* substitute E for X in the (renamed) body as well as in the annotation *)
    exp_lam(Yfresh,
            typ_subst(X, E, Tlam),
            exp_subst(X, E, Elamfresh))
  | exp_tlam(A, Etlam) =>
    exp_tlam(A, exp_subst(X, E, Etlam))
  | exp_app(E1, E2) =>
    exp_app(exp_subst(X, E, E1), exp_subst(X, E, E2))
  | exp_tapp(Etapp, T) =>
    exp_tapp(exp_subst(X, E, Etapp), typ_subst(X, E, T))
  end
```

Expression substitution might be boring, but it shows the ML fragment well enough. It’s more or less the usual ML, though functions need to have pure interfaces, and we have a few restrictions in place to keep typing simple in our prototype.

There’s lots of fun stuff that doesn’t make it into this example: not only can relations call functions, but functions can examine relations (so long as everything is stratified). Hiding inside fresh_for is a clever approach to name generation that guarantees freshness… but is also deterministic and won’t interfere with parallel execution. The draft paper has more substantial examples.
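For readers who want to play with the idea outside Formulog, here is a small Python sketch of capture-avoiding substitution with a deterministic fresh-name choice. It is a sketch under assumptions: terms are plain tuples for an untyped lambda calculus, and this `fresh_for` simply appends primes until the name is unused. That priming strategy is chosen for illustration only and is not a description of how Formulog's `fresh_for` works.

```python
def free_vars(term):
    """Return the set of free variable names in a term."""
    tag = term[0]
    if tag == "var":
        return {term[1]}
    if tag == "lam":
        return free_vars(term[2]) - {term[1]}
    return free_vars(term[1]) | free_vars(term[2])  # "app"


def fresh_for(name, avoid):
    """Pick a name outside `avoid` by appending primes (deterministic)."""
    while name in avoid:
        name += "'"
    return name


def subst(x, e, target):
    """Capture-avoiding substitution of term `e` for variable `x` in `target`."""
    tag = target[0]
    if tag == "var":
        return e if target[1] == x else target
    if tag == "app":
        return ("app", subst(x, e, target[1]), subst(x, e, target[2]))
    _, y, body = target  # "lam"
    # The binder must avoid x, the free variables of e, and the other
    # free variables of the body.
    avoid = {x} | free_vars(e) | (free_vars(body) - {y})
    y_fresh = fresh_for(y, avoid)
    if y_fresh != y:
        body = subst(y, ("var", y_fresh), body)
    return ("lam", y_fresh, subst(x, e, body))


# Substituting y for x under a binder named y forces a rename to y'.
term = ("lam", "y", ("app", ("var", "x"), ("var", "y")))
result = subst("x", ("var", "y"), term)
```

Because the fresh name depends only on the inputs, calling `subst` twice on the same arguments gives the same term. That is the property you want if results are stored as facts in relations or computed in parallel.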

We’re not the first to combine logic programming and SMT. What makes our design a sweet spot is that it doesn’t let SMT get in the way of Datalog’s straightforward and powerful execution model. Datalog execution is readily parallelizable; the magic sets transformation can turn Datalog’s exhaustive, bottom-up search into a goal-directed one. It’s not news that Datalog can turn these tricks—Yiannis Smaragdakis has been saying it for years!—but integrating Datalog cleanly with ML functions and SMT is new. Check out the draft paper for a detailed related work comparison. While our design is, in the end, not so complicated, getting there was hard.
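If Datalog's "exhaustive, bottom-up search" is unfamiliar, the following Python sketch shows the idea on the classic reachability program. It uses a naive fixpoint loop written for illustration, not Formulog's evaluator, and the relation names `edge` and `path` are made up for the example.

```python
def bottom_up(edges):
    """Compute all `path` facts as a least fixpoint.

    Encodes the two Datalog rules
        path(X, Y) :- edge(X, Y).
        path(X, Z) :- path(X, Y), edge(Y, Z).
    """
    path = set(edges)  # first rule: every edge is a path
    while True:
        # Second rule: join known path facts with edge facts.
        derived = {(x, z) for (x, y) in path for (y2, z) in edges if y == y2}
        new_facts = derived - path
        if not new_facts:
            return path  # nothing new: fixpoint reached
        path |= new_facts


edges = {("a", "b"), ("b", "c"), ("c", "d")}
closure = bottom_up(edges)
```

The loop derives every `path` fact, even if the only question is what is reachable from `c`. Restricting the search to facts relevant to such a query is the job of a transformation like magic sets.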

Relatedly, we also have an extended abstract at ICLP 2020, detailing some experiments in using incremental solving modes from Formulog. You might worry that Datalog’s BFS (or heuristic) strategy wouldn’t work with an SMT solver’s push/pop (i.e., DFS) assertion stack, but a few implementation tricks and check-sat-assuming do provide speedups.