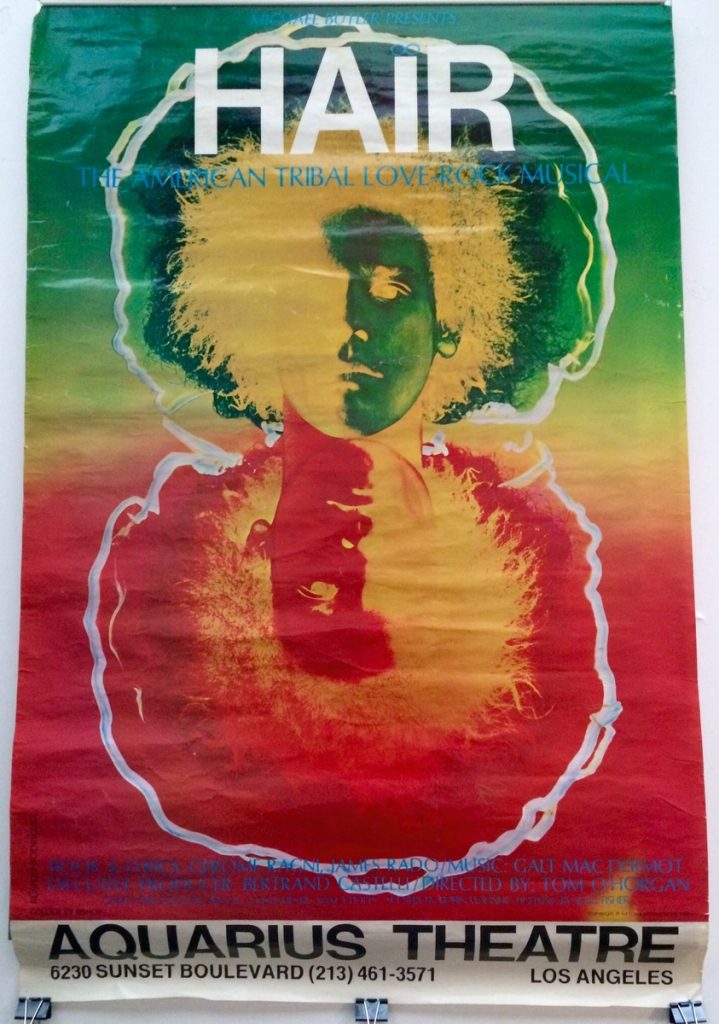

I want to believe in a future where the lion will lie down with the lamb; we’ll beat our swords into plowshares; and developers will migrate dynamic prototypes to robust static systems with confidence. But these Aquarian visions are elusive. Having a map of the road to paradise in theory doesn’t mean we know how to get there in practice. Let me tell you about two papers at OOPSLA that shuffle us a few steps forward on this long pilgrim’s trail.

Migrating programs

How do you actually get a program from Scheme into ML? Or from JavaScript into TypeScript? The theory of gradual typing goes far beyond these pedestrian questions. In principle, we know how to reconcile dynamism with much more complex systems, like information flow or refinement types or effect systems. But there’s very little tooling to support moving any particular Scheme program into ML. (If your program is a Racket program, then you’re in some luck.)

People have studied program migration before, under a variety of names. Papers go back at least to 2009, arguably even earlier. There are lots of different approaches, and most comprise some form of type inference and custom constraint solving—complex! Worse still, there’s been no consensus on how to evaluate these systems. Luna Phipps-Costin, Carolyn Jane Anderson, me, and Arjun Guha dug into program migration. Our paper, “Solver-based Gradual Type Migration”, tries to build a map of the known territory so far:

- There are competing desiderata: maximal type precision, compatibility with code at different types, and preserving the existing semantics of your program, i.e., safety.

- We evaluate a variety of past techniques on prior benchmarks, and we devise a novel set of “challenge” problems. Our evaluation framework is robust, and you could plug in other approaches to type migration and evaluate them easily.

- We introduce a new, very simple approach to type migration, which we call TypeWhich. TypeWhich uses an off-the-shelf SMT solver. You can choose how compatible/precise you want it to be, but it’ll always be safe.

I’m excited about each of these contributions, each for its own reason.

For (1), I’m excited to formally explain that what you’re actually trying to do with your code matters. “Gradual typing” sensu lato is pretty latus indeed. Are you migrating a closed system, module by module? Or are you coming up with type annotations for a library that might well be called by untyped clients? These are very different scenarios, and you probably want your type migration algorithm to do different things! Bringing in these competing concerns—precision, compatibility, and safety—gives researchers a way to contextualize their approaches to type migration. (All that said, to me, safety is paramount. I’m not at all interested in a type migration that takes a dynamic program that runs correctly on some input and produces a statically typed program that fails on the same input… or won’t even compile! That doesn’t sound very gradual to me.)

For (2), I’m excited to be building a platform for other researchers. To be clear, there’s a long way to go. Our challenge problems are tiny toys. There’s a lot more to do here.

For (3), I’m excited to have an opportunity to simplify things. The TypeWhich constraint generator is simple, classic PL; the constraints it generates for SMT are straightforward; the models that SMT generates are easy to understand. It’s a cool approach!

One tiny final note: Luna has done a tremendous amount of incredibly high quality work on this project, both in code and concept. She’s just now starting her third-year of undergraduate study. So: watch out! You ain’t ready.

Typed functional programming isn’t about functions

If there’s a single defining ‘killer’ feature of typed functional programming, it isn’t first-class functions at all: it’s algebraic datatypes. Algebraic datatypes help make illegal states unrepresentable and ASTs easy to work with. They’re a powerful tool, and their uptake in a variety of new-hotness languages (Kotlin, Rust, Swift) speaks to their broad appeal.

Moving Scheme code to ML is an old goal, and it’s the bread and butter of the introductory sections of gradual typing papers. But are we any closer than we were fifteen years ago? (I’d say “yes”, and point at Typed Racket, or “nobody knows what’s happening anyway” and point at Idris’s Chez Scheme runtime.)

Stefan Malewski, me, and Éric Tanter tried to figure out how algebraic datatypes play with dynamic features. Our paper, “Gradually Structured Data“, uses AGT to ‘compute’ static and dynamic semantics for a language with possibly open algebraic datatypes and the unknown type in a few flavors (?, the unknown type; a new ground type for “datatype”, the same way int and bool and ?->? are ground; and a new type for “any open datatype”). The features gel in a nice way, letting us express some cool behaviors (see Section 2 for how one might evolve a simple JSON API) and sit in a novel space (see Section 5 for a thorough comparison to related features).

I’m particularly pleased that we’ve found a new place in the design spectrum (per our feature chart in Section 5) that seems to support incremental program migration (per our examples in Section 2)—and it’s formally grounded (by using AGT in the middle, formal sections).

This paper came out of conversations with Éric after my screed about gradual typing’s two lineages at SNAPL (see also my followup blogpost, “What to Define When You’re Defining Gradual Type Systems”). There’s plenty more to do: what about separate compilation? What are the right representation choices? How should runtime checks really go, and how can programmers control the costs?

I remember a question I was asked after giving the talk for “Contracts Made Manifest” at POPL 2010 with some panic fondly. That paper compares the latent approach to contracts in Racket-then-Scheme (well structured runtime checks at module boundaries) to the manifest approach (runtime checks are a form of type coercion, occurring anywhere) in the emerging refinement types literature (Sage, Liquid Types, etc.). I had shown that the two aren’t equivalent in the presence of dependency, and I concluded by talking about how the two implementation approaches differed. So: somebody asked, “Which approach should you use?” To be honest, I had hardly even thought about it.

So, suppose you wanted use algebraic datatypes and dynamic features today: which language should you use? I’ve thought about it, and the answer, sadly, is, “It depends”. OCaml’s polymorphic variants get you a long way; Haskell’s Dynamic could work great, but it’s badly in need of usable surface syntax. (I’ve tried to get Richard Eisenberg to help me with the fancy work to make that happen, but he’s justifiably worried that the Haskell community would run him out of town.) Scala, Haskell, and OCaml are your best bets if you want true algebraic datatypes. If you’re more relaxed about things, Typed Racket or TypeScript could work well for you. If what you’re looking for is a type system expressive enough to capture interesting dynamic idioms, then I think there’s a clear choice: CDuce. Ever since un bel recensore anonimo at SNAPL 2019 showed me that CDuce can type flatten, I’ve been impressed. Check this out:

let flatten ( Any -> [ (Any\[Any*])* ] ) (* returns a list of non-lists ???? *)

| [] -> [] (* nil *)

| (h,t) -> (flatten h)@(flatten t) (* cons *)

| x -> [x] (* anything else *)Look at that type! In just a few lines of CDuce, we can show that flatten produces not just a list of elements, but a list of things that are not themselves lists. The price here is that CDuce’s types are set-theoretic, which means things are a touch different from what people are used to in OCaml or Haskell. But if you’re okay with that, CDuce is a serious contender!

Coda: see you at OOPSLA?

I’m planning on going to OOPSLA 2021 in Chicago, given the twoopsla and the opportunity to present a paper from OOPSLA 2020, “Formulog: Datalog for SMT-based static analysis”, with Aaron Bembenek and Steve Chong. I’ve already blogged about it, but I’m excited to get to give an in-person version of the talk, too. You can still watch Aaron’s excellent recorded talk on YouTube and enjoy the cabin vibes. There won’t be cabin vibes at my OOPSLA 2020 talk, but there will be terrible jokes. So: think about it. Will I see you at OOPSLA? I hope so!